On Synthetic Data: How It's Improving & Shaping LLMs

Synthetic data is making LLMs better – especially smaller ones.

Trainers are rephrasing input data, using larger “teacher models” to distill it from unruly webpages into structured Q&A or step-by-step, linear reasoning. They’re reshaping the content from a form written by humans, for humans, into a form that closely resembles chatbot conversation.

This has many benefits. Distilling content down, concentrating its knowledge, allows you to produce smarter, smaller models. Extracting reasoning instructions teaches models how to build up evidence, enabling new “reasoning” models.

But synthetic data isn’t a silver bullet. The nature of synthetic data makes it better for some jobs and weaker for others, especially those that can’t be tested at scale. And an increasing reliance on synthetic data for training LLMs is making them better at quantitative tasks (like coding and math) but not delivering similar results for other use cases.

Synthetic data is helping LLMs scale the data wall, but it’s doing so while creating a growing perception gap between those who use LLMs for quantitative tasks and those who use them for anything else, generating significant confusion.

This post is a primer on synthetic data and how it’s being used to build better LLMs. We’ll cover what it is, how it’s made, and how it’s improving and shaping LLMs.


What is Synthetic Data?

There isn’t a great primer on synthetic data and how it’s used in AI today. Even Ilya, when discussing what comes after the “pre-training era”, remarked, “But what does synthetic data mean? Figuring this out is a big challenge.”

Synthetic data is artificially generated data, as opposed to data captured from real-world events. To many, this sounds suspect: how can we create valuable data by just making it up? Well, we do so by using authentic data (often referred to as “seed data”) to establish the qualities of a dataset, which we then use as rules to guide the creation of synthetic data.

Initially, synthetic data was used to preserve privacy and confidentiality. Researchers want to analyze sensitive data that can’t be shared (raw Census responses, health records, financial transactions), so statistical models capable of generating fully synthetic output are built from the sensitive data. The new dataset “has the same mathematical properties as the real-world data set it’s standing in for, but doesn’t contain any of the same information.”

Let’s start with a simple example: a company creating a CRM system wants to test a phone number detection system, but is unable to run the software on their customers’ data due to privacy and security restrictions. So developers review a sample of publicly available, human-entered phone numbers, note the patterns they contain, and write a small function to generate as many phone numbers as they need:

```python
import random


def generate_us_phone_number():
    """
    Generates a synthetic, human-input-style US phone number.

    Returns:
        str: A random US phone number in a common human-input format.
    """
    formats = [
        "({area_code}) {prefix}-{line_number}",  # e.g., (123) 456-7890
        "{area_code}-{prefix}-{line_number}",  # e.g., 123-456-7890
        "{area_code}{prefix}{line_number}",  # e.g., 1234567890
        "{area_code} {prefix}-{line_number}",  # e.g., 123 456-7890
    ]

    # Random digits for each component of the number
    area_code = f"{random.randint(200, 999)}"
    prefix = f"{random.randint(200, 999)}"
    line_number = f"{random.randint(1000, 9999)}"

    # Pick one of the observed human-input formats at random
    format_choice = random.choice(formats)
    return format_choice.format(area_code=area_code, prefix=prefix, line_number=line_number)
```

There’s little complexity here, but this function produces synthetic data that allows for privacy-safe testing. By observing patterns in the seed data, the developers built a mechanism for randomly generating phone numbers within the patterns of observed reality.
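Here is a minimal sketch of the testing this enables, assuming the CRM’s phone number detector can be approximated by a regular expression. `PHONE_PATTERN`, `detects_phone_number`, and `detection_rate` are hypothetical stand-ins for the real system under test, and the generator is restated compactly so the snippet runs on its own:

```python
import random
import re


def generate_us_phone_number() -> str:
    """Compact restatement of the generator above: one of four human-input formats."""
    formats = [
        "({area_code}) {prefix}-{line_number}",
        "{area_code}-{prefix}-{line_number}",
        "{area_code}{prefix}{line_number}",
        "{area_code} {prefix}-{line_number}",
    ]
    return random.choice(formats).format(
        area_code=random.randint(200, 999),
        prefix=random.randint(200, 999),
        line_number=random.randint(1000, 9999),
    )


# Hypothetical detector under test: optional parentheses, optional separators.
PHONE_PATTERN = re.compile(r"\(?\d{3}\)?[ -]?\d{3}-?\d{4}")


def detects_phone_number(text: str) -> bool:
    return PHONE_PATTERN.search(text) is not None


def detection_rate(n: int = 10_000, seed: int = 0) -> float:
    """Fraction of n synthetic numbers, embedded in a sentence, that the detector finds."""
    random.seed(seed)
    hits = sum(
        detects_phone_number(f"Call me at {generate_us_phone_number()} tomorrow.")
        for _ in range(n)
    )
    return hits / n
```

Because every number is synthetic, a failing case can be logged, shared, and debugged freely: none of it is customer data.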

These functions, and the statistical models capturing the shape of the seed data, can get much more complex. For example, Microsoft used a computer graphics pipeline to generate 1 million synthetic images of human faces.

While Microsoft’s DigiFace dataset is valuable for its privacy-preserving qualities, it demonstrates several additional benefits of synthetic datasets:

- Synthetic datasets have clean, consistent, always-present labels. Images gathered from the web frequently lack labels or sport incorrect labels. To quote Microsoft’s paper: “For example, the Labeled Faces in the Wild dataset contains several known errors, including: mislabeled images, distinct persons with the same name labeled as the same person, and the same person that goes by different names labeled as different persons.” This isn’t a problem with the DigiFace dataset, which uses the same software to generate and assign labels to faces.

- Synthetic datasets have unlimited scale. The DigiFace dataset picked 1 million faces arbitrarily; it could have kept running. Even our toy Python function can generate all the numbers we’d ever need (on my machine it took less than 2 seconds to generate 1 million phone numbers). Collecting authentic datasets takes time and money, and gets harder as you go.

- Synthetic datasets reduce bias with breadth of coverage. Image datasets collected from public images tend to skew towards people more likely to have their picture taken, namely celebrities. And, “celebrity faces also have imbalanced racial distribution.” Further, these images are more likely to be better lit, with the person wearing makeup, and of high clarity. With synthetic datasets, this isn’t a problem. We can generate a wide array of people, distributed across all parameters.

- Synthetic datasets reduce bias with depth of coverage. Public image sets skew towards poses and facial expressions people are likely to make when their photo is being taken. We aren’t usually smiling, but you wouldn’t know that from our pictures. With synthetic data, “we can render multiple images by varying the pose, expression, environment (lighting and background), and camera.”

DigiFace is split into two distributions: one with 720k images from 10k distinct faces (72 images per identity) and one with 500k images from 100k identities (5 images per identity). The consistency, coverage, and scale of this synthetic facial dataset allowed for training competitive facial recognition models, with only a small dataset of actual faces.
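“Depth of coverage” amounts to enumerating variations per identity instead of hoping the web happens to contain them. The sketch below plans renders as a grid over hypothetical pose, expression, lighting, and camera values, chosen only so that 4 × 3 × 3 × 2 = 72 variants per identity; it is not DigiFace’s documented sampling scheme. Every planned image carries its identity label by construction, which is where the clean labels come from:

```python
import itertools
from dataclasses import dataclass

# Hypothetical rendering parameters, for illustration only.
POSES = ["frontal", "left_30", "right_30", "up_15"]
EXPRESSIONS = ["neutral", "smile", "frown"]
LIGHTING = ["studio", "outdoor", "dim"]
CAMERAS = ["near", "far"]


@dataclass(frozen=True)
class RenderJob:
    identity_id: int  # the label is known because we chose the identity
    pose: str
    expression: str
    lighting: str
    camera: str


def plan_renders(num_identities: int) -> list[RenderJob]:
    """Plan one render per (identity, pose, expression, lighting, camera) combination."""
    jobs = []
    for identity_id in range(num_identities):
        for pose, expr, light, cam in itertools.product(POSES, EXPRESSIONS, LIGHTING, CAMERAS):
            jobs.append(RenderJob(identity_id, pose, expr, light, cam))
    return jobs
```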

How is Synthetic Data Improving LLMs?

Using computer graphics to synthesize faces and small functions to generate phone numbers isn’t particularly perilous. Both programs have enough knowledge of their domains to prevent the creation of egregiously wrong data.

But when it comes to synthesizing text content for general models, the difficulty level scales up. In the same way we broke down the fundamental components of a US phone number (area code, prefix, line number), we could break down the fundamental components of most sentences (subject, verb, object) and paragraphs (topic sentence, supporting sentences, concluding sentence), but using that structure as guardrails to generate content would synthesize a whole bunch of nonsense. It’s okay if our phone number components are literally random. Applying the same approach to text does not work.
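To see the problem, here is the phone number approach applied to sentences: a subject-verb-object template whose slots are filled independently at random. The word lists are made up for illustration. Every output is grammatical, but nothing ties the parts together, so most outputs are nonsense:

```python
import random

# Made-up word lists; each slot is filled independently, like the phone number parts.
SUBJECTS = ["The Bay Area", "A phone number", "My cat", "The Census"]
VERBS = ["compiles", "eats", "anchors", "forecasts"]
OBJECTS = ["a mortgage", "San Jose", "the unit tests", "three oranges"]


def random_sentence(rng: random.Random) -> str:
    """Fill a subject-verb-object template with independently chosen words."""
    return f"{rng.choice(SUBJECTS)} {rng.choice(VERBS)} {rng.choice(OBJECTS)}."


rng = random.Random(42)
for _ in range(3):
    print(random_sentence(rng))
```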

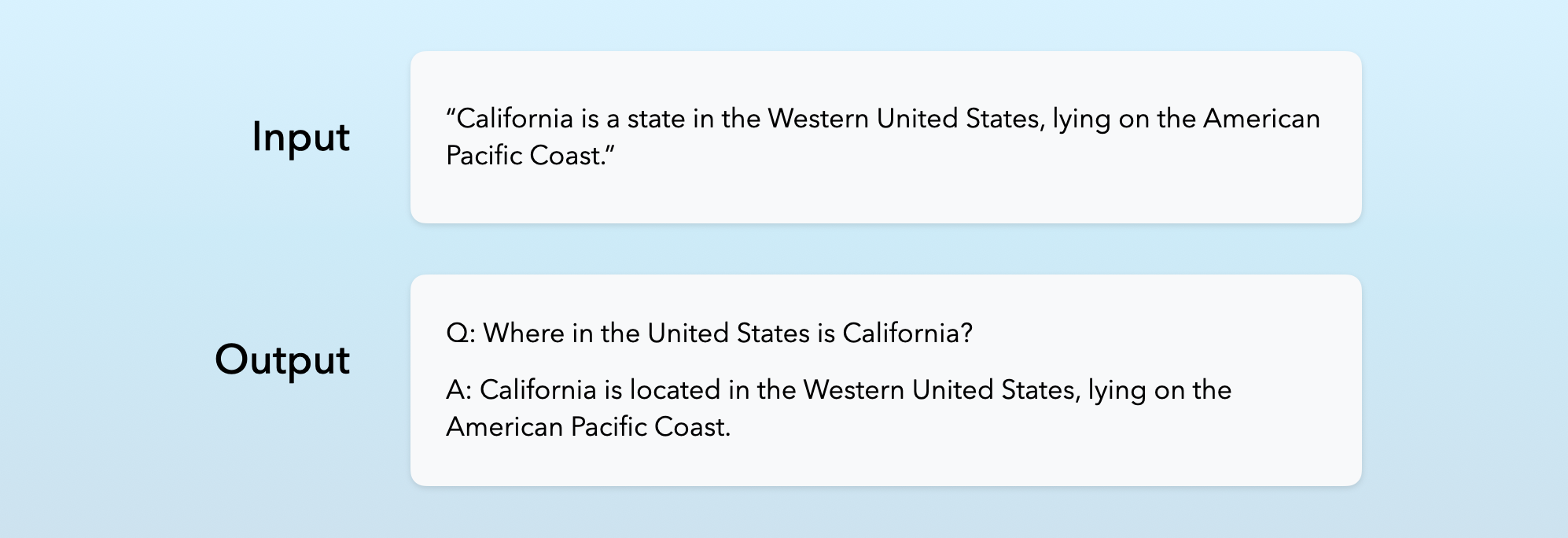

Due to this, when training LLMs, synthetic data is mostly used to rephrase text content. The underlying content or knowledge remains the same, but how it’s expressed is shifted. For example, let’s provide Claude with the following prompt:

Rewrite the following sentence 3 different ways with an increasingly casual style: "The San Francisco Bay Area, commonly known as the Bay Area, is a region of California surrounding and including San Francisco Bay, and anchored by the cities of San Francisco, Oakland, and San Jose."

This yields:

1. The San Francisco Bay Area—or just "the Bay Area" as it's widely known—refers to the California region that includes San Francisco Bay and is home to the major cities of San Francisco, Oakland, and San Jose.

2. The Bay Area is what people call the part of California that wraps around San Francisco Bay, with San Francisco, Oakland, and San Jose being the main cities that anchor the whole region.

3. So basically the Bay Area is just all the stuff around SF Bay - you've got San Francisco, Oakland, and San Jose as the main spots, and that's pretty much what everyone means when they talk about it.

This is a toy example, but it illustrates the general pattern. We obtain some seed data (our original sentence) and a teacher model (the LLM doing the rephrasing work, here Claude 3.5 Sonnet), and provide the teacher with instructions detailing how we want the seed rephrased (our prompt).
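Those three ingredients map onto a small pipeline. This is a sketch, not any lab’s actual tooling: `Teacher` is any function from prompt to text (in practice a wrapper around an LLM API call), and `fake_teacher` is a hypothetical placeholder so the code runs offline:

```python
from typing import Callable

# Hypothetical teacher interface: prompt text in, completion text out.
Teacher = Callable[[str], str]


def build_prompt(instruction: str, seed: str) -> str:
    """Combine the rephrasing instruction with the seed text, as in the prompt above."""
    return f'{instruction}: "{seed}"'


def rephrase(seeds: list[str], instruction: str, teacher: Teacher) -> list[dict]:
    """Run every seed through the teacher, keeping seed and output side by side."""
    return [
        {"seed": seed, "synthetic": teacher(build_prompt(instruction, seed))}
        for seed in seeds
    ]


def fake_teacher(prompt: str) -> str:
    # Placeholder so the sketch runs offline; a real pipeline would call an LLM here.
    return f"[teacher output for: {prompt[:40]}...]"
```

Keeping the seed next to each output makes it easy to audit how far the teacher drifted from the source.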

But why might we want to rephrase or reorganize our content? There are a few reasons…

Rephrasing Content to Match Expected Interactions

If we rephrase our content so it more closely resembles our ultimate, conversational interaction with users, we can train on less data, improve the capabilities of our models, and spend much less time on post-training. This technique is laid out by researchers at Apple and CMU in their paper, “Rephrasing the Web.”

As originally defined, LLMs were text completion models: they take an input bit of text and spit out more text they’d predict might follow, based on their weights. OpenAI’s GPT-3 is a completion model, and by fine-tuning this model with human teachers providing example Q&A content, they created ChatGPT, a conversational or chat model. Since then, models usually ship with chat and instruct builds, though some still ship completion versions.

The “Rephrasing the Web” paper suggests rephrasing text data in a Q&A format, partially so we can skip the post-training step where we teach completion models to converse. The team used Mistral 7B as a teacher model, prompting it to rephrase text in four different styles:

- “Easy (text that even a toddler will understand)”

- “Medium (in high quality English such as that found on Wikipedia)”

- “Hard (in terse and abstruse language)”

- “Q/A (in conversation question-answering format)”

Content from the C4 web content dataset was rephrased, in a sense distilled down to only content the ultimate model would likely receive or produce.
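Fanning a corpus out across the four styles might look like the sketch below. The style descriptions are quoted from the list above; the surrounding prompt wording, the `rephrase_corpus` helper, and the teacher interface are assumptions, not the paper’s exact prompts:

```python
from typing import Callable

# Hypothetical teacher interface: prompt text in, rephrased text out.
Teacher = Callable[[str], str]

# Style descriptions taken from the list above; the prompt wording around them is assumed.
STYLES = {
    "easy": "text that even a toddler will understand",
    "medium": "high quality English such as that found on Wikipedia",
    "hard": "terse and abstruse language",
    "qa": "conversation question-answering format",
}


def style_prompt(document: str, style: str) -> str:
    """Build a rephrasing prompt for one document in one style."""
    return f"Rephrase the following text in {STYLES[style]}:\n\n{document}"


def rephrase_corpus(documents: list[str], styles: list[str], teacher: Teacher) -> list[dict]:
    """Rephrase every document (e.g. a C4 web page) in every requested style."""
    records = []
    for document in documents:
        for style in styles:
            synthetic = teacher(style_prompt(document, style))
            records.append({"style": style, "source": document, "synthetic": synthetic})
    return records
```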

This not only resulted in 3x faster training (since there was less training data to process) but also in better models. The authors write, “re-phrasing documents on the web using an off-the-shelf medium size LLM allows models to learn much more efficiently than learning from raw text on the web, and accounts for performance gains on out of distribution datasets that can not be offset with additional web data.” In other words, rephrasing yields gains that simply adding more web data can’t replicate.

Further, this rephrasing lets you skip much of the chat-focused post-training: “Using synthetic data enables baking in desirable attributes such as fairness, bias, and style (like instruction following) directly into the data, eliminating the need to adjust the training algorithm specifically.” If we use a model to reformat our data into Q&A form from the start, we don’t have to fine-tune the model as heavily afterward.

In many ways this is a UX improvement. Translating pre-training data into Q&A or Wikipedia-style writing induces better formatting, correctness, and efficiency. But it doesn’t add new knowledge.

In fact, this translation step (which I think is better thought of as “distillation”) is lossy. It removes data, while seeking to preserve the best knowledge. The Apple and CMU team observed this: their initial model would often fail when people provided input with linguistic errors or typos. To mitigate this, they trained the model on half rephrased data and half authentic web data, adding “noise” to capture the sloppy way humans communicate.
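A minimal sketch of that 50/50 mix, assuming we sample with replacement from a pool of rephrased documents and a pool of raw web documents. The paper’s exact sampling procedure may differ, and `mix_training_data` is a hypothetical helper:

```python
import random


def mix_training_data(
    real: list[str], synthetic: list[str], n: int, synthetic_fraction: float = 0.5, seed: int = 0
) -> list[str]:
    """Sample n training documents, with `synthetic_fraction` of them from the rephrased pool."""
    rng = random.Random(seed)
    n_synthetic = round(n * synthetic_fraction)
    batch = rng.choices(synthetic, k=n_synthetic) + rng.choices(real, k=n - n_synthetic)
    rng.shuffle(batch)  # interleave so both styles appear throughout training
    return batch
```

Keeping the fraction as a parameter makes the trade-off explicit: push it toward 1.0 for cleaner, more efficient data, or lower it to keep more of the messy phrasing real users type.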

Synthetic data can make smaller models more efficient and effective by rephrasing data to match expected interactions. But because the initial data is merely getting distilled, no new knowledge is being added.

Reorganizing Content into Linear, Step-By-Step Reasoning

With reasoning models showing promising results, might we improve our data distillation process by extracting explicit reasoning steps? This is exactly the approach the Microsoft team behind Phi-4 used and it resulted in one hell of a model.

People don’t usually write in a structured, step-by-step linear fashion. They write more casually or even start with their conclusion before enumerating their support. This is at odds with the linear way LLMs process data. The Phi-4 team writes:

In organic datasets the relationship between tokens is often complex and indirect. Many reasoning steps may be required to connect the current token to the next, making it challenging for the model to learn effectively from next-token prediction… A simple example to illustrate this is that a human-written solution to a math problem might start with the final answer. This answer is much too hard to output immediately, for either a human or an LLM—the human produced it by nonlinear editing, but pretraining expects the LLM to learn to produce it linearly. Synthetic solutions to math problems will not have such roadblocks.

Rephrasing content as linear, step-by-step reasoning – matching the way LLMs generate tokens – might increase LLM efficiency and performance.

The first challenge for the Phi-4 team was identifying authentic seed data capable of having its reasoning extracted.

They created question datasets from sources like Quora, AMAs on Reddit, or the questions LinkedIn prompts you to answer. High-quality content demonstrating, “complexity, reasoning depth, and education value,” was selected from web pages, books, scientific papers, and code. Much of Microsoft’s previous Phi work dealt with this filtering methodology. In some cases, these high-quality selections were rephrased into Q&A content, similar to the previous example. All of this went into their seed pile.

Using GPT-4o as a teacher, the team transformed the seeds into synthetic data “through multi-step prompting workflows… rewriting most of the useful content in given passages into exercises, discussions, or structured reasoning tasks.” This synthetic dataset made up 40% of Phi-4’s training data, with more direct web rephrasing making up an additional 15%. More than half of Phi-4’s pre-training corpus is synthetic!

This process delivered astounding results: on graduate-level STEM and math evaluations, Phi-4 outperforms its teacher, GPT-4o. This is particularly impressive because Phi-4 contains only 14 billion parameters (GPT-4o likely has hundreds of billions). A 16 GB version of Phi-4 runs at ~20 tokens a second on my Mac Studio, fast enough for most use cases, and generates really good code.

Unquestionably this is LLM progress! The Phi team combines the rephrasing learnings with reasoning extraction techniques to generate synthetic data that produces a better model.

But it’s not a silver bullet. This translation does not replace the old scaling law that more data equals smarter models.

First off, these techniques only work for smaller models. This process relies on the presence of larger teacher models, like GPT-4o. The synthetic data techniques we’ve discussed so far distill knowledge from data. They do not add new information. This primarily helps create smaller models, as it reduces the size of the input data. The Phi-4 team calls out this nuance, as have other teams. The Llama 3 paper notes:

Models show significant performance improvements when trained on data generated by a larger, more competent model. However, our initial experiments revealed that training Llama 3 405B on its own generated data is not helpful (and can even degrade performance).

Synthetic data improves smaller models by leveraging large ones. The ceiling of LLM capabilities will likely rise slowly while small models continue to race forward. We’re left with a key question: who teaches the teachers?

Another weakness of this process is that distillation is lossy. We can try our best to keep the most pertinent information, but we cannot prevent loss. While rephrasing improves the “reasoning” and “understanding” of these small models, we cannot get around the fact that fewer parameters hold fewer facts. “While phi-4 achieves similar level of language understanding and reasoning ability as much larger models, it is still fundamentally limited by its size for certain tasks, specifically in hallucinations around factual knowledge.”

Small models aren’t good knowledge banks.

We saw this pretty clearly in my piece exploring DSPy: asking the 1-billion-parameter Llama a question about a specific person resulted in hallucinations, while the 70-billion-parameter Llama recognized the individual perfectly.

Finally, these synthetic data methods tend to overfit to an interaction paradigm. The Apple and CMU team had to mix in organic data to allow the model to understand messy, real-world interactions. The Phi-4 team suffered similar challenges: “As our data contains a lot of chain-of-thought examples, phi-4 sometimes gives long elaborate answers even for simple problems—this might make user interactions tedious.”

This effect also shows up if you ask Phi-4 to output in a specific format. It’s not good at outputting only JSON, bulleted structures, tabular data, stylistic requests, and other formats that aren’t the reasoning style it was trained on. When testing DSPy, I tried Phi-3.5 (which shares this characteristic with Phi-4) before I tried Llama: it failed to work with DSPy at all, defeating DSPy’s attempts to coax out a specifically formatted output.

While this quality is a noted weakness of Phi-4, I think it is better seen as a sign that these small models will become more specialized. “Chat”, “Instruct”, or “Code” variants will multiply into further specialties. Phi-4 is kicking this off, as it’s not quite a chat model and not quite an instruct model: “While phi-4 can function as a chat bot, it has been fine-tuned to maximize performance on single-turn queries.”

The rephrasing and reasoning extraction techniques we’ve described so far only distill. They concentrate knowledge from the data we already have, producing better, faster, smaller models.

But is there a way to synthesize new knowledge?

Synthesizing New Code

LLMs are getting better at programming because we can quantitatively test synthetic code. As noted above, we are limited when we create synthetic text data. We can’t randomly generate content according to a defined structure; we’re only able to rephrase and extract knowledge from existing data.

But this is not so with quantitative subjects like code! Teams have been generating wholly synthetic code, further from the input seed data, since at least Llama 3. The team at Meta ran the following process:

- Generate a large collection of programming problems: Collect a ton of organic code snippets from your seed dataset, spanning a diverse range of topics. Present these to a teacher model and ask it to generate programming problems that could be answered by these examples.

- Generate solutions for the problems: Prompt a large model to generate code that answers your problems, many times and in many different programming languages.

- Evaluate the solutions: Run all the generated solutions through a parser and a linter (a tool that finds potential errors and bugs) to catch syntax errors and other static problems. Then use a model to write unit tests for each problem and execute them alongside the solution, checking that the code runs and outputs the desired result. Set aside all the potential solutions that fail.

- Try to correct the errors: Send the failing solutions back through the large LLM, noting their bugs or failures, and ask for a rewrite. The Llama team found that 20% of the incorrect solutions were corrected with one additional try.
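The generate, check, and correct loop can be sketched in a few dozen lines. This is a simplified illustration under stated assumptions: `Model` is a hypothetical prompt-to-code function, `ast.parse` stands in for the parser and linter stage, the unit tests are supplied rather than model-written, and `exec` runs untrusted code in-process, which a real pipeline would do inside a sandboxed container:

```python
import ast
from typing import Callable, Optional

# Hypothetical model interface: prompt text in, Python source code out.
Model = Callable[[str], str]
# Each case is (positional args, expected return value).
Case = tuple[tuple, object]


def static_check(source: str) -> Optional[str]:
    """Stand-in for the parser/linter stage: return an error message, or None if it parses."""
    try:
        ast.parse(source)
    except SyntaxError as err:
        return f"SyntaxError: {err.msg} (line {err.lineno})"
    return None


def run_tests(source: str, func_name: str, cases: list[Case]) -> Optional[str]:
    """Execute the solution against its unit tests; return a failure message, or None."""
    namespace: dict = {}
    try:
        exec(source, namespace)  # a real pipeline would run this in a sandboxed container
        func = namespace[func_name]
        for args, expected in cases:
            got = func(*args)
            if got != expected:
                return f"{func_name}{args} returned {got!r}, expected {expected!r}"
    except Exception as err:  # runtime errors count as failures too
        return f"{type(err).__name__}: {err}"
    return None


def generate_verified_solution(
    problem: str, func_name: str, cases: list[Case], model: Model, max_retries: int = 1
) -> Optional[str]:
    """Generate a solution, check it, and retry with feedback; return passing code or None."""
    prompt = problem
    for _ in range(max_retries + 1):
        source = model(prompt)
        error = static_check(source) or run_tests(source, func_name, cases)
        if error is None:
            return source
        # Feed the failure back to the model and ask for a rewrite.
        prompt = f"{problem}\n\nYour previous solution failed with:\n{error}\nPlease fix it."
    return None
```

With a stub model that returns a buggy `add` on the first call and a correct one on the retry, the loop returns the corrected solution; a model that never fixes its bug yields `None`, and that problem is dropped from the dataset.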

This process, combined with simpler translation of code from common programming languages into less common ones, generated the majority of the synthetic data used to train Llama 3. The Phi-4 team performed a similar process as well, applying validation to all quantitative synthetic data they could test.

This process works: Phi-4 scores higher on coding benchmarks, “than any other open-weight model we benchmark against, including much larger Llama models.”

I find this ridiculously fascinating. These compound AI systems for generating new knowledge from seed data are ingenious and effective. LLMs, especially small models, have gotten incredible at code over the last two years. And while programming most naturally fits this approach, we might apply this problem-solution-check-correct pattern to any quantitative task.
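As a toy instance of that pattern outside of code, here is a sketch that generates arithmetic word problems whose answers are computed at generation time, so any model-written solution can be checked automatically. The problem template and the `Solver` interface are hypothetical, and the correction step is omitted for brevity:

```python
import random
import re
from typing import Callable, Optional

# Hypothetical model interface: question text in, worked solution text out.
Solver = Callable[[str], str]


def make_problem(rng: random.Random) -> tuple[str, int]:
    """Create a word problem from a template; the answer is computed, not guessed."""
    apples = rng.randint(2, 50)
    price = rng.randint(1, 9)
    question = f"Each apple costs ${price}. How much do {apples} apples cost in dollars?"
    return question, apples * price


def final_number(text: str) -> Optional[int]:
    """Treat the last integer in a solution as its final answer."""
    numbers = re.findall(r"-?\d+", text)
    return int(numbers[-1]) if numbers else None


def build_verified_dataset(n: int, solver: Solver, seed: int = 0) -> list[dict]:
    """Generate n problems, solve each with the model, keep only verified solutions."""
    rng = random.Random(seed)
    kept = []
    for _ in range(n):
        question, answer = make_problem(rng)
        solution = solver(question)
        if final_number(solution) == answer:  # the check step
            kept.append({"question": question, "solution": solution})
    return kept
```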

A Growing Reliance on Synthetic Data Creates a Perception Gap

Spend some time reading technical papers for new models and you’ll notice a theme: a good chunk of the content deals with quantitative problems. Math and code are the focus right now, with new and complex synthetic data pipelines refashioning seed data and testing the results. The headline evaluations are quantitative tests, like MATH and HumanEval. Synthetic data is pushing models further and delivering improvements, especially in areas where synthetic data can be generated and tested.

There are additional sources of data that can help mitigate this bias. Proprietary user-generated data, like your interactions with Claude or ChatGPT, provides human signal and qualitative rankings. Hired AI trainers will continue to generate feedback that will tune and guide future models, but all of this relies on humans, who are slower, more expensive, and less consistent than synthetic data generation methods.

We will pick off testable use cases from qualitative domains (RAG performance, entity and snippet extraction from long texts), but most of the field will remain accessible only to distillation methods, notably rephrasing.

Synthetic data is a tool for scaling the data wall, but it’s lopsided. For non-quantifiable fields, it can only distill signals from existing data. It cannot create new knowledge.

As a result, we can expect the following:

- Inference is now part of training: Building new models will require incredible amounts of inference, not just training. Multistep pipelines for generating synthetic data rely on teacher models to create the mountains of signal needed to push models forward.

- Smaller models will get better faster: Smaller models will continue to improve while large models will progress slowly, as synthetic data techniques rely on larger, more capable teacher models. SemiAnalysis – in an informative piece arguing against an LLM slowdown – claims this dynamic is behind the delayed release of Claude 3.5 Opus: “Anthropic used Claude 3.5 Opus to generate synthetic data and for reward modeling to improve Claude 3.5 Sonnet significantly, alongside user data. Inference costs did not change drastically, but the model’s performance did. Why release 3.5 Opus when, on a cost basis, it does not make economic sense to do so?”

- Models will get better at testable skills: Quantitative domains, like programming and math, will continue to improve because we can create more novel, massive, synthetic datasets thanks to unit tests and other validation methods. Qualitative chops and knowledge bank capabilities will be more difficult to address with synthetic data techniques and will suffer from a lack of new organic data.

- An AI perception gap will emerge: Those who use AIs for programming will have a remarkably different view of AI than those who do not. The more your domain overlaps with testable synthetic data, the more you will find AIs useful as an intern. This perception gap will cloud our discussions.

As Ilya said in his talk, we’ll need new methods to push past the data wall. Synthetic data is an incredible tool, but it isn’t a silver bullet.