A Gentle Intro to Running a Local LLM

How to Run a Chatbot on Your Laptop (and Why You Should)

Open, small LLMs have gotten really good. Good enough that more people – especially non-technical people – should be running models locally. Doing so not only provides you with an offline, privacy-safe, helpful chatbot; it also helps you learn how LLMs work and appreciate the diversity of models being built.

Following the LLM field is complicated. There are plenty of metrics you can judge a model on and plenty of types of models that aren’t easily comparable to one another. Further, the metrics that enthusiasts and analysts evaluate models might not matter to you.

But there is an overarching story across the field: LLMs are getting smarter and more efficient.

And while we continually hear about LLMs getting smarter, before the DeepSeek kerfuffle we didn’t hear so much about improvements in model efficiency. But models have been getting steadily more efficient, for years now. Those who keep tabs on these smaller models know that DeepSeek wasn’t a step-change anomaly, but an incremental step in an ongoing narrative.

These open models are now good enough that you – yes, you – can run a useful, private model for free on your own computer. And I’ll walk you through it.

Sections

Installing Software to Run Models

Large language models are, in a nutshell, a collection of probabilities. When you download a model, that’s what you get: a file(s) full of numbers. To use the model you need software to perform inference: inputting your text into a model and generating output in response to it. There are many options here, but we’re going to pick a simple, free, cross-platform app called Jan.

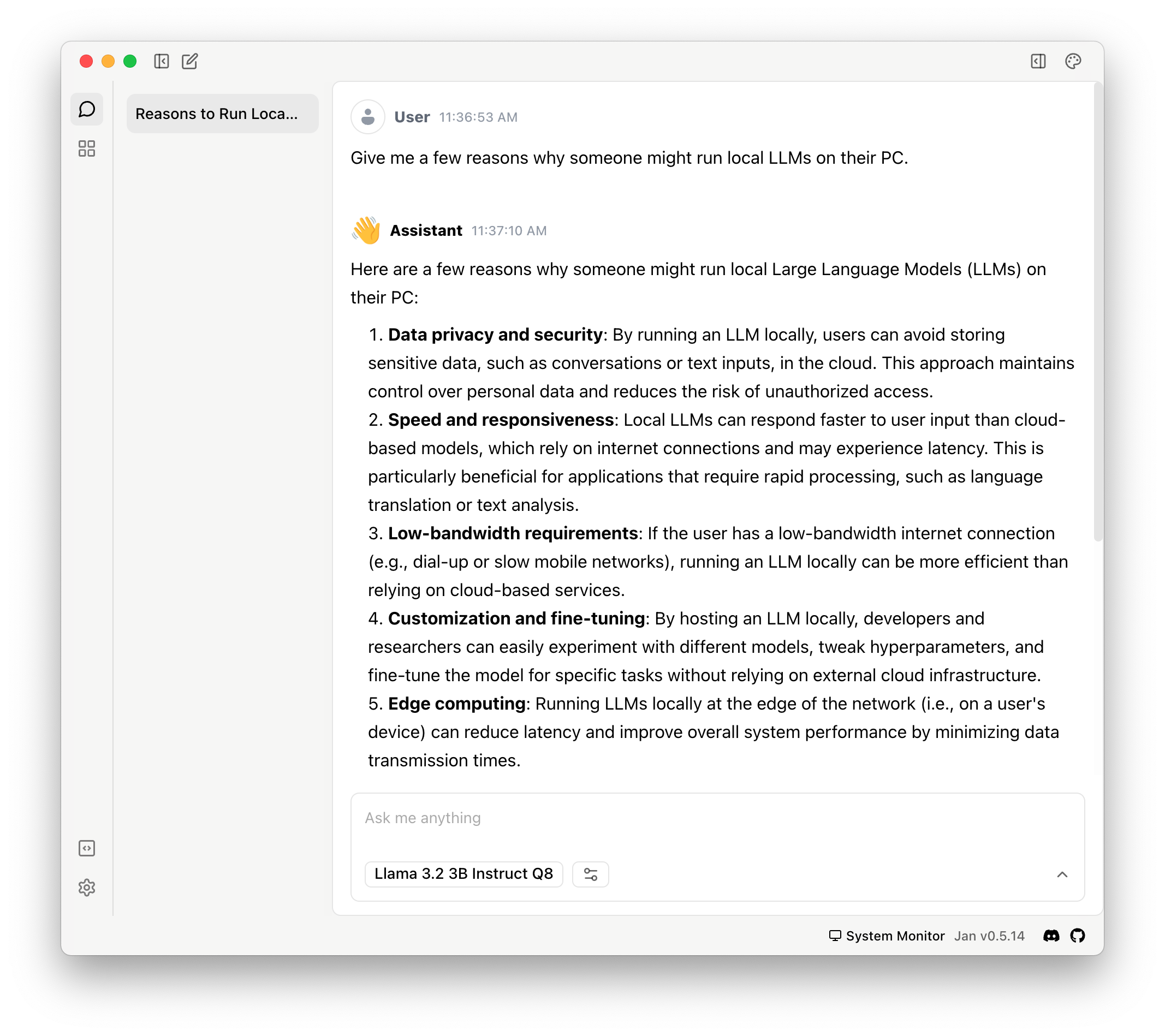

Jan is an open-source piece of software that manages and runs models on your machine and talks to hosted models (like the OpenAI or Anthropic APIs). If you’ve used ChatGPT or Claude, its interface will feel familiar:

Go download Jan and come back when it’s installed.

In the screenshot above we’re in the chat tab (see that speech bubble icon in the upper left?) Before we can get started, we’ll need to download a model. Click the “four square” icon under the speech bubble and go to the model “hub”.

Making Sense of Models

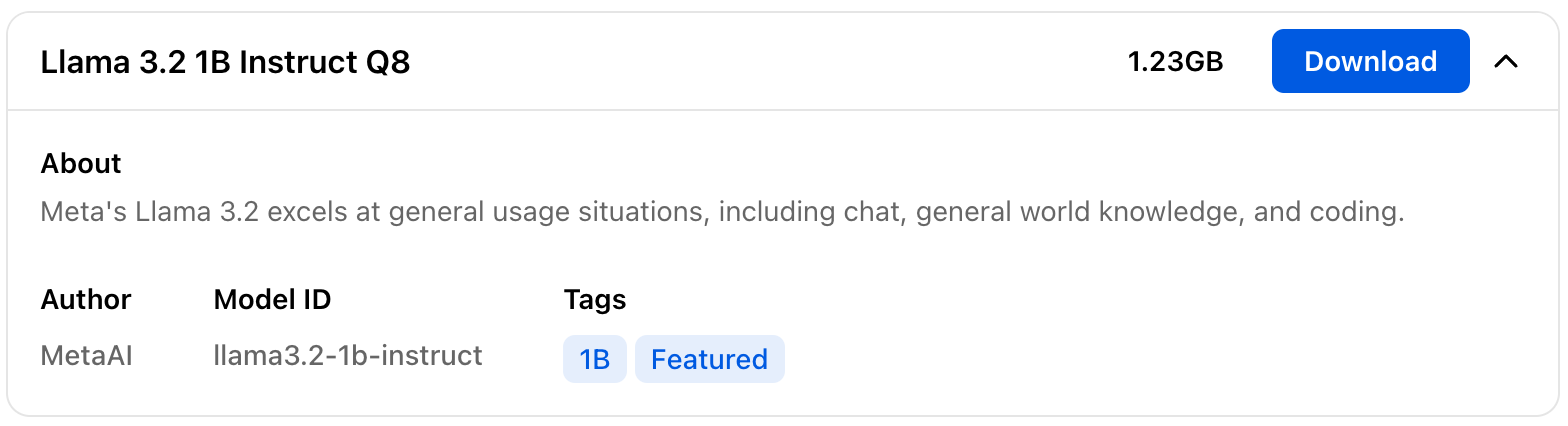

In Jan’s model hub we’re presented with a list of models we can download to use on our machine.

We can see the model name, the file size of the model, a button to download the model, and a carat to toggle more details for a given model. Like these:

If you are new to this, I expect these model names to be very confusing. The LLM field moves very fast and has evolved language conventions on the fly which can appear impenetrable to the unfamiliar (don’t make me tap the sign!).

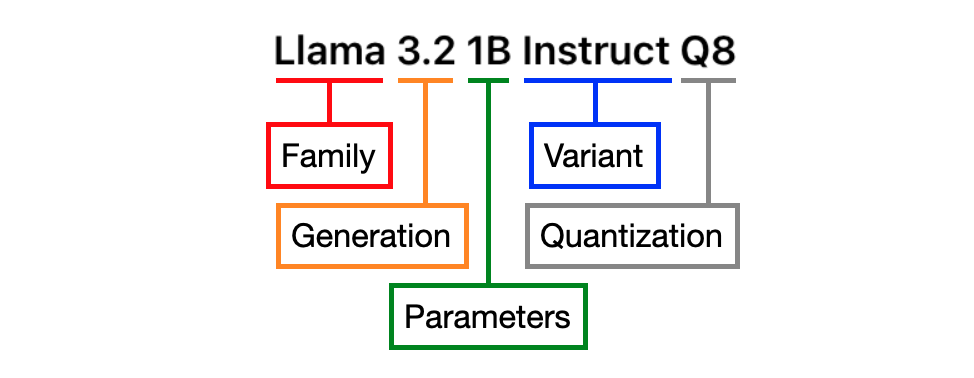

But we can clear this up. You don’t need to understand the following to run a local model, but knowing the language conventions here will help demystify the domain.

Let’s go from left to right:

- Family: Models come in families, which helps you group them by the teams that make them and their intended use (usually). Think of this as the model’s brand name. Here, it’s “Llama”, a family of open models produced by Meta.

- Generation: The generation number is like a version number. A larger number means a more recent model, with the value to the left of the decimal indicating major generations. The number to the right of the decimal might indicate an incremental update or signify a variant for a specific use case. Here we’re looking at 3.2, a generation of Llama models which are smaller sized and designed to run “at the edge” (aka, on a device like a phone or PC, not a remote server).

- Parameters: As I said, models are essentially collections of probabilities. The parameter count is the number of probabilities contained in a given model. This count loosely correlates to a model’s performance (though much less so these days). A model with more parameters will require a more powerful computer to run. Parameter count also correlates with the amount of space a model takes up on your computer. This model has 1 billion parameters and clocks in at 1.23GB. There is a 3 billion parameter option in the Llama 3.2 generation as well, weighing in at 3.19GB.

- Variant: Different models are built for different purposes. The variant describes the task for which a model is built or tuned. This model is made to follow instructions, hence, “Instruct.” You will also see models with “Chat” or “Code” (both self-explanatory). “Base” we tend to see less of these days, but it refers to models that have yet to be tuned for a specific task.

- Quantization: Quantization is a form of model compression. We keep the same number of parameters, but we reduce the details of each. In this case, we’re converting the numbers representing the probabilities in the model from highly detailed numbers with plenty of decimal places to 8-bit integers: whole numbers between -128 and 127. The “Q8” here says the weights in the model have been converted to 8-bit integers. We’re saving plenty of space in exchange for some potential model wonkiness during usage.

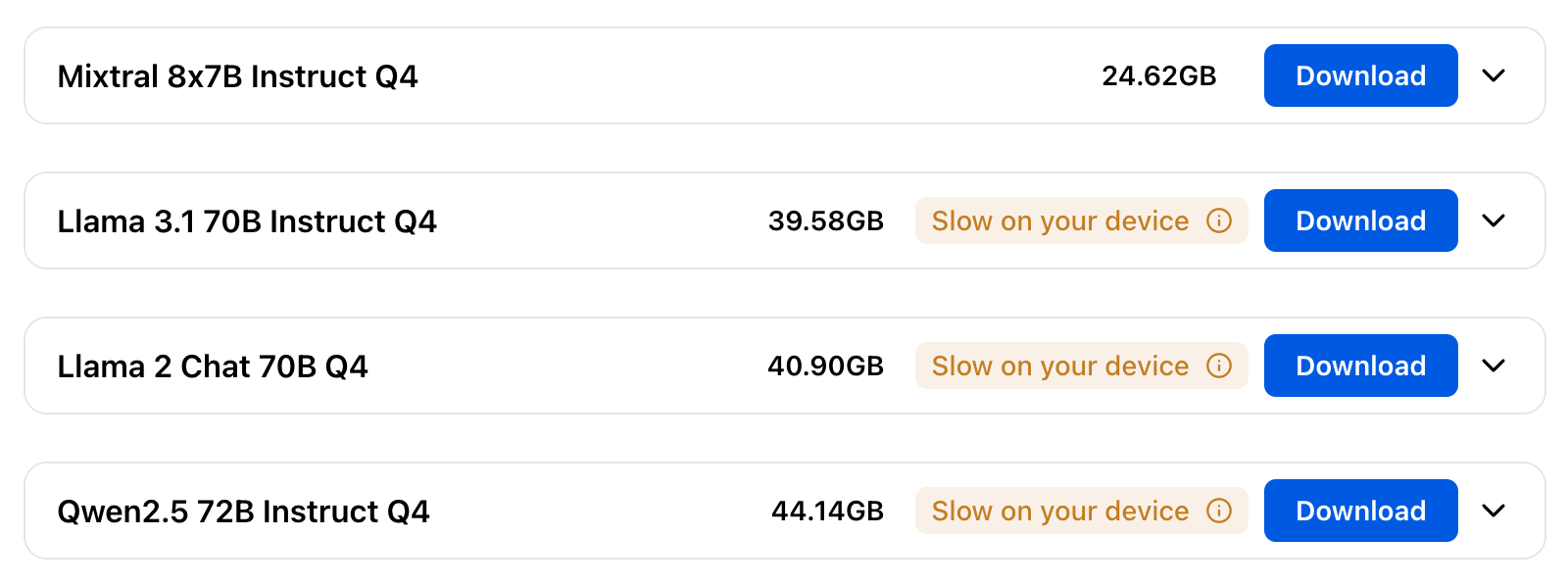

Don’t worry too much about the quantization notation – you don’t need to know it. Jan very helpfully provides us with warnings if a model won’t run well on our machines:

When getting started, feel free to stick with the model recommendations I list below. But if you want to explore, download and try anything that can run on your machine. If it’s slow, non-sensical, or you just don’t like it: delete it and move on.

The models listed here are curated by Jan. But Jan can run any text model hosted on Hugging Face (a website for sharing datasets and machine learning models) in the GGUF format (a file format for sharing models). And there’s plenty of them.

But let’s put a pin in this.

For now, go back to Jan’s model hub and hit the “Download” button for “Llama 3.2 3B Instruct Q8”. If Jan says this model will be, “Slow on your device,” download “Llama 3.2 1B Instruct Q8”. It’s smaller, but still great for its size. Come back when the download is complete.

Chatting with Jan

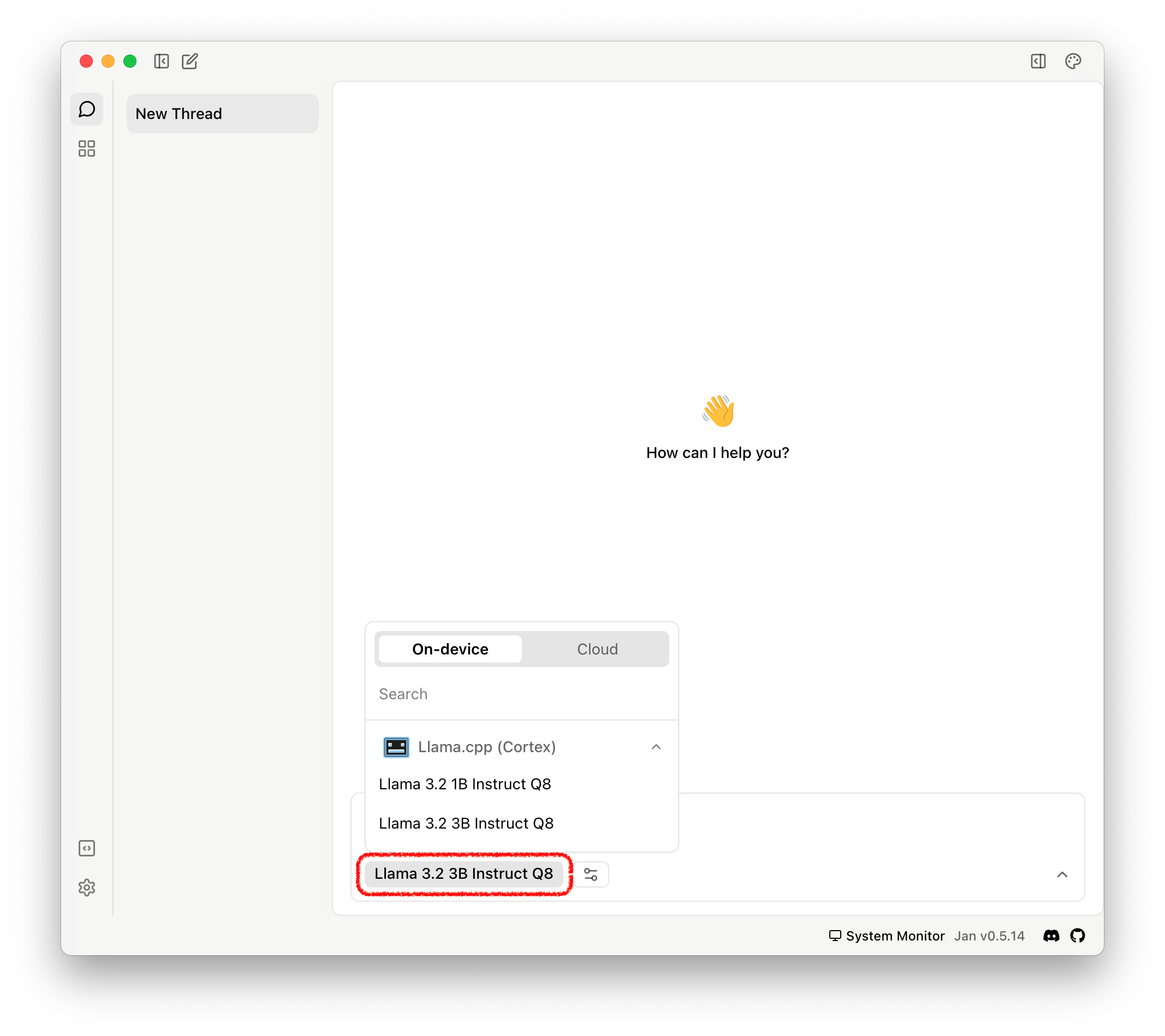

When Llama 3.2 is downloaded, switch back to the chat pane by clicking the speech bubble in the upper-left. In the text field at the bottom, there’s a model selector. Check to make sure it’s set to the model you just downloaded:

As you download more models, you can switch between them here.

Typing text in the text field and hitting enter creates a new thread (just like ChatGPT or Claude).

Here’s what the 3B parameter model says when we ask, “Why might someone want to run a LLM on their laptop, rather than using a cloud provider?”

While running a Large Language Model (LLM) on a cloud provider can be convenient and scalable, there are some reasons why someone might prefer to run an LLM on their own laptop:

1. **Cost savings**: Cloud providers charge by the hour or usage, which can add up quickly. Running an LLM locally can save money in the long run, especially if you only need to use it occasionally.

2. **Data ownership and control**: When you host your LLM on a cloud provider, you may have limited control over how your data is stored, processed, and shared. Running it locally ensures that you maintain full control over your data and can ensure its privacy and security.

3. **Customization and flexibility**: By running an LLM locally, you can customize the environment to suit your specific needs without relying on cloud provider configurations or APIs. This can be particularly useful for research or development purposes where you need to experiment with different models or hyperparameters.

4. **Faster training times**: Training a large language model can take significant time and computational resources. Running it locally means that you don't have to worry about network latency, API timeouts, or other cloud-related issues that might slow down the training process.

5. **Offline access**: With a local LLM, you can work on projects without relying on an internet connection, making it ideal for situations where connectivity is limited or unreliable.

6. **Reduced dependency on cloud providers**: By running your own LLM locally, you reduce your dependence on cloud providers and their infrastructure. This can be beneficial if you're concerned about the stability of cloud services or prefer to maintain a more decentralized approach.

7. **Self-hosting and security**: Running an LLM locally allows you to implement additional security measures and ensure that your model is not exposed to external attacks or vulnerabilities.

However, it's worth noting that running a large language model on a laptop can be computationally intensive and may require significant resources (e.g., GPU power). If you're planning to run a large-scale LLM, cloud providers often offer more suitable solutions with optimized infrastructure and economies of scale.

And here’s the 1B parameter model response to the same question:

Running a Large Language Model (LLM) on a laptop can be beneficial for:

1. **Small projects or proof-of-concepts**: For smaller tasks, like experimenting with different models or hyperparameters, running an LLM locally might be sufficient.

2. **Research and development**: Researchers may need to run small-scale experiments or prototype their ideas without being tied to cloud infrastructure.

3. **Personal use cases**: Individuals who only occasionally work on language-related projects can benefit from local hosting, as it provides a cost-effective alternative to cloud providers.

However, running an LLM on a laptop has some limitations:

1. **Limited scalability**: As the model size or complexity increases, local hosting may become impractical due to computational constraints.

2. **Data storage and processing**: Running an LLM locally requires significant storage space for data and intermediate results, which can be challenging in laptops with limited disk capacity.

3. **Network latency and security concerns**: Local connections can introduce latency and compromise the model's security, especially if you're handling sensitive or proprietary data.

To overcome these limitations, researchers and developers often use:

1. **Cloud-based distributed training**: Training large models on a cloud provider, which allows for scalability and parallel processing.

2. **Serverless computing**: Using serverless platforms that provide a pay-as-you-go pricing model, enabling users to scale up or down as needed.

3. **Edge computing**: Deploying computations closer to the source of data, reducing latency and improving security.

Ultimately, whether running an LLM on a laptop or cloud provider depends on your specific needs, project requirements, and preferences.

Both are pretty good for such tiny models! The 3B-sized Llama does a better job, sticking to the question and providing numerous reasons. But this comes with a speed cost: on my Mac with 64GB of RAM, Llama 3B produces ~50 words-per-minute while Llama 1B produces ~113.

Figuring out which model is right for you takes experimentation. But I have a few recommendations to get you started.

Recommended Models

Unless otherwise noted, you can download these models in Jan by copy-and-pasting the URL in the model name link.

For people with (at least) a Mac with 32GB of RAM or an NVIDIA RTX 4090:

Mistral-Small-24B-Instruct is a bit of a revelation. While there have been open GPT-4o class models that fit on laptops (Llama 3.3 comes to mind), Mistral-Small is the first one I’ve used whose speed is comparable as well. For the last week, I’ve been using it as my first stop – before Claude or ChatGPT – and it’s performed admirably. Rarely do I need to try a hosted model. It’s that good.

If you can run the “Q4” version (it’s the 13.35GB option in Jan, when you paste in the link), I strongly recommend you do. This is the model that inspired me to write this post. Local models are good enough for non-nerds to start using them.

Also good: Microsoft’s Phi-4 model is a bit smaller (~8GB when you select the “Q4” quantized one) but also excellent. It’s great at rephrasing, general knowledge, and light reasoning. It’s designed to be a one-shot model (basically, it outputs more detail for a single question and isn’t designed for follow-ups), and excels as a primer for most subjects.

For people who want to see a model “reason”:

Yeah, yeah, yeah…let’s get to DeepSeek.

DeepSeek is very good, but you’re not likely going to be able to run the original model on your machine. However, you likely can run one of the distilled models the DeepSeek team prepared. Distillation is a strategy to create lightweight versions of large language models that are both efficient and effective. A smaller model ‘learns’ by reviewing the output from a larger model.

In this case, DeepSeek R1 was used to train Qwen 2.5, an excellent set of smaller models, how to reason. (DeepSeek also distilled Llama models) Getting this distilled model up and running in Jan requires an extra step, but the results are well worth it.

First, paste the DeepSeek-R1-Distill-Qwen14B model URL into Jan’s model hub search box. If you’re on Mac, grab the “Q4_1” version, provided you can run it. On Windows, grab the “Q4-K_M”. (If either of those are flagged as not being able to run on your machine, try the 7B version. It’s about ~5GB.)

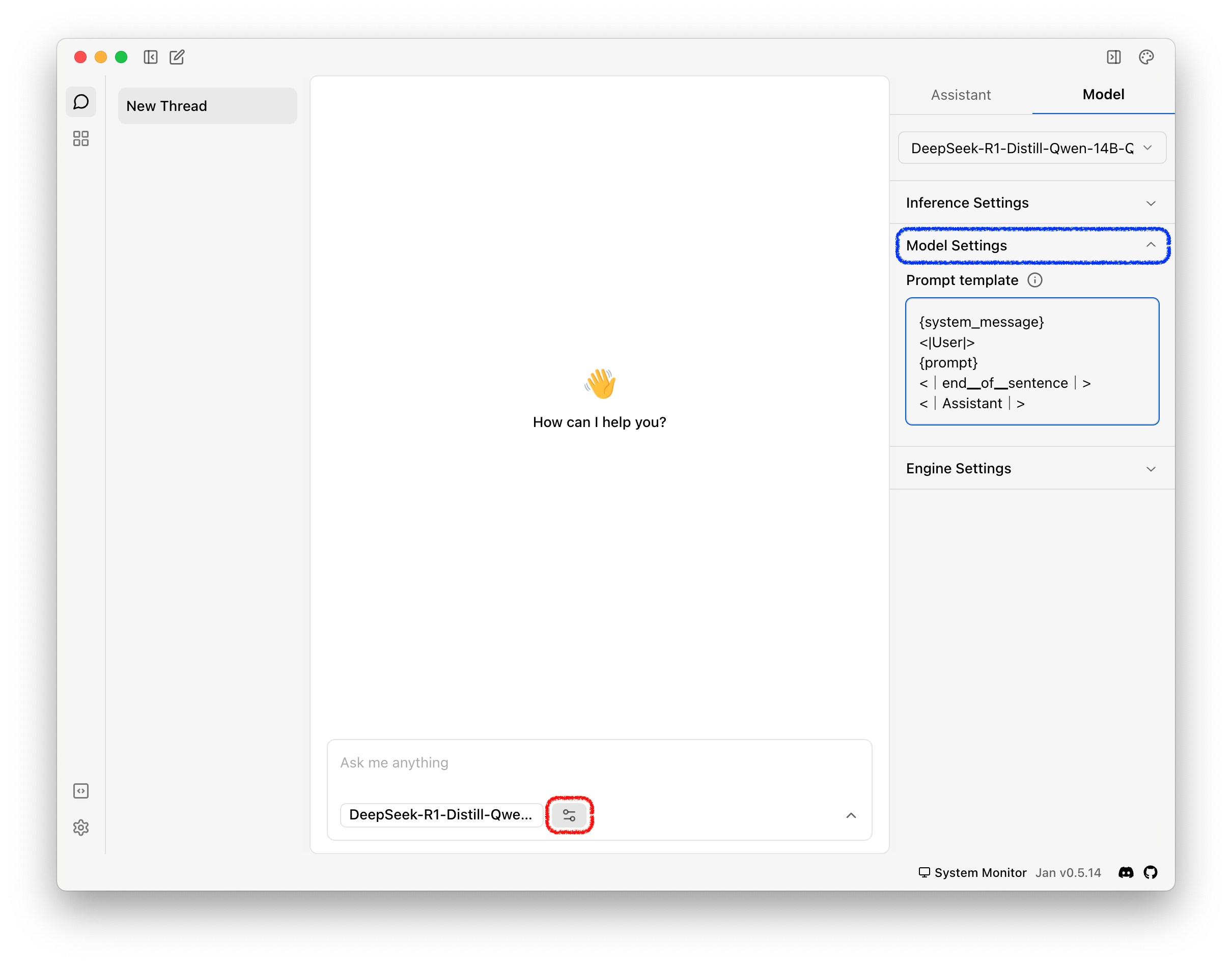

Once the model downloads, click the “use” button and return to the chat window. Click the little slider icon next to the model name (marked in red, below). This toggles the thread’s settings. Toggle the “Model Settings” dropdown (marked in blue) so that the “Prompt template” is visible.

Your prompt template won’t look like the one in the image above. But we’re going to change that.. Paste the following in the prompt template field, replacing its current contents:

{system_message}

<|User|>

{prompt}

<|end▁of▁sentence|>

<|Assistant|>

Think of prompt templates as wrappers that format the text you enter into a format the model expects. Templates can vary, model by model. Thankfully, Jan will take care of most of this for you if you stick to their model library.

This change we’ve made, though, will let you see this model “think”. For example, here’s how it replies to our question about why one might want to use local LLMs. The reasoning is bracketed by <think> and </think> tokens and prefaces the final answer.

Why Bother?

There are giant, leading LLMs available for cheap or free. Why should one bother with setting up a chatbot on their PC?

Llama 3.2 3B already answered this for us, but…

- It’s free: These models work with the PC you have and require no subscriptions. Your usage is only limited by the speed of the model.

- It’s 100% privacy-safe: None of your questions or answers leave your PC. Go ahead, turn off your WiFi and start prompting – everything works perfectly.

- It works offline: The first time I used a local model to help with a coding task while flying on an airplane without WiFi, it felt like magic. There’s something crazy about the amount of knowledge these models condense into a handful of gigabytes.

- It’s customizable: We only scratched the surface here by changing our prompt template. But unfold the “Inference Settings” tab and take a look at the levers waiting to be pulled. Discussing these all is beyond the scope of this article, but here’s a quick tip: the “Temperature” setting effectively controls how much randomness is added during inference. Try setting it to each extreme and see how it changes your responses.

- It’s educational: This is the main reason you should bother with local LLMs. Merely grabbing a few models and trying them out demystifies the field. This exercise is an antidote to the constant hype the AI industry fosters. By getting your hands just slightly dirty, you’ll start to understand the real-world trajectory of these things. And hey, maybe the next DeepSeek won’t be so surprising when it lands.

So much of the coverage around LLMs focuses on raising the ceiling: the improved capabilities of the largest models. But beneath this noise the floor is being raised. There’s been incredible progress: the capabilities of models you can run on moderately powered laptops perform as well as the largest models from this time last year. It’s time to try a local model.