MCPs are APIs for LLMs

Everyone is suddenly talking about the Model Context Protocol (MCP) without explaining what it is. On Google Trends, searches for “What is MCP?” are rising nearly as fast as those for “Model Context Protocol”.

Here’s the headline: MCP servers are APIs LLMs can use.

Released by Anthropic last November, the Model Context Protocol is described as “a new standard for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments.” But even that description is a bit jargony.

Here’s the simple version:

- An MCP server exposes a bunch of endpoints, like any other API server, but it must also have endpoints that list all of its available functions in a standard format an MCP client can understand.

- MCP clients (usually LLM-powered apps, like Anthropic’s Claude Desktop) can then connect to MCP servers and immediately know which tools are available to them.

- LLMs connected to an MCP server can then call its tools using the specs the server provides.
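To make that list-then-call pattern concrete, here is a minimal, in-process sketch in Python. It assumes MCP’s JSON-RPC 2.0 message shape with its `tools/list` and `tools/call` methods, but it skips everything a real server also needs: a transport (stdio or HTTP), the `initialize` handshake, and proper error handling. The `get_weather` tool and its canned answer are hypothetical.

```python
import json

# Hypothetical tool registry: each entry pairs what the server advertises
# (description plus a JSON Schema for inputs) with the function that runs it.
TOOLS = {
    "get_weather": {
        "description": "Get the current weather for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        # Canned answer; a real server would query a weather service here.
        "handler": lambda args: f"It is sunny in {args['city']}.",
    },
}


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to an MCP-style method."""
    request = json.loads(raw)
    method = request["method"]
    if method == "tools/list":
        # Discovery: describe every tool in a standard format.
        result = {
            "tools": [
                {
                    "name": name,
                    "description": tool["description"],
                    "inputSchema": tool["inputSchema"],
                }
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        # Invocation: run the named tool with the client's arguments.
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is JSON-RPC's standard "Method not found" error code.
        return json.dumps({
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": f"Method not found: {method}"},
        })
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


if __name__ == "__main__":
    # A client first discovers the tools, then calls one of them.
    print(handle_request(json.dumps(
        {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
    print(handle_request(json.dumps({
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Oakland"}},
    })))
```

The key design point is that the client never needs to know about `get_weather` in advance: everything it needs to call the tool arrives in the `tools/list` response.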

That’s it! It’s incredibly simple: a standard that enables a Web 2.0 era for LLM applications, giving models plug-and-play access to tools, data, and prompt libraries.

If you want to try MCP (and you’re on a Mac), do this:

- Download Claude Desktop. Install it, start it up, try it out.

- Download iMCP, a macOS app that connects your Apple calendar, contacts, location, messages, reminders, and weather to an MCP server it manages. (You can turn these on one by one. If you’re cautious about exposing sensitive data, just turn on weather and location for the demo. But these are trusted developers who’ve been active in the indie Mac development community forever.)

- Follow the instructions on the iMCP page, then launch (or relaunch) Claude Desktop.

After clicking through a few privacy and access alerts, you’ll see a little hammer icon in the bottom right of your Claude text field. Click it and you’ll see each tool iMCP provides, which Claude Desktop obtained by calling the “list” function every MCP server must provide.
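In the MCP specification, that “list” call is the `tools/list` method. The sketch below shows, under simplifying assumptions, how a client might turn a `tools/list` response into the kind of menu Claude Desktop shows under the hammer icon. The sample response and its tool names are invented for illustration; they are not iMCP’s actual tools.

```python
import json

# An illustrative tools/list response (hypothetical tools, not iMCP's real ones).
SAMPLE_RESPONSE = """
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "tools": [
      {"name": "get_location",
       "description": "Get the user's current location.",
       "inputSchema": {"type": "object", "properties": {}}},
      {"name": "get_weather",
       "description": "Get the forecast for a location.",
       "inputSchema": {"type": "object",
                       "properties": {"latitude": {"type": "number"},
                                      "longitude": {"type": "number"}},
                       "required": ["latitude", "longitude"]}}
    ]
  }
}
"""


def tool_menu(response_text: str) -> list[str]:
    """Return one 'name(params): description' line per advertised tool."""
    tools = json.loads(response_text)["result"]["tools"]
    lines = []
    for tool in tools:
        # The input schema's properties are the arguments the model may pass.
        params = ", ".join(tool.get("inputSchema", {}).get("properties", {}))
        lines.append(f"{tool['name']}({params}): {tool.get('description', '')}")
    return lines


for entry in tool_menu(SAMPLE_RESPONSE):
    print(entry)
```

A real client would also hand these names, descriptions, and schemas to the model, so it can decide which tool fits a request.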

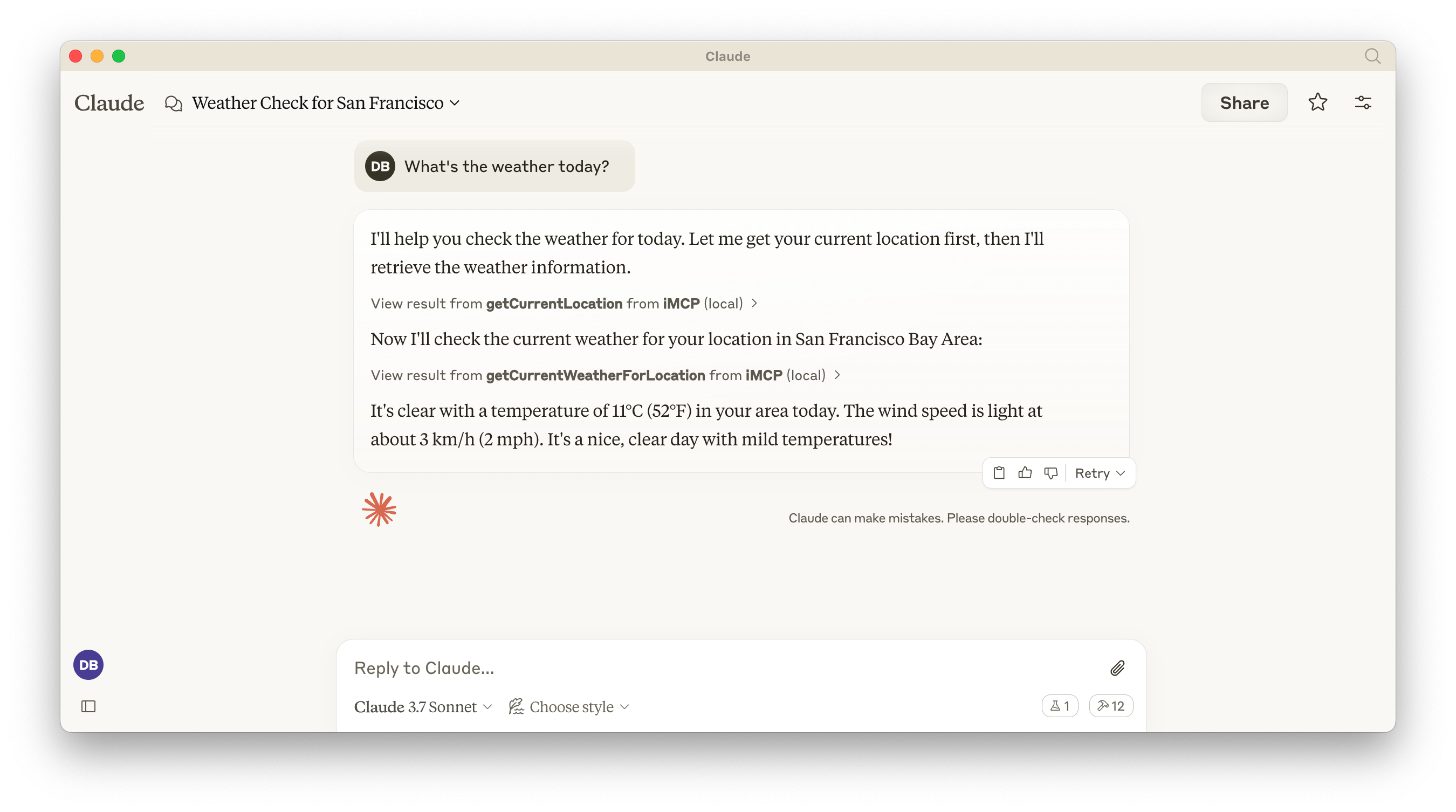

Now, ask Claude, “What’s the weather today?” A few more permission dialogs will pop up, and you’ll see Claude call iMCP to get your location and the weather.
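Behind the scenes, the model picks a tool from that list and the client sends a `tools/call` request on its behalf. Here is a hedged sketch of that step: building the JSON-RPC request and pulling the text out of the result. The tool name, its arguments, and the `fake_server` stand-in are hypothetical; a real client would send the message over the transport it negotiated with the server, after asking your permission as Claude Desktop does.

```python
import json
from itertools import count
from typing import Callable

_ids = count(1)  # JSON-RPC request ids must be unique within a session


def make_call(name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 tools/call request for the named tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def result_text(response: str) -> str:
    """Join the text items of a tools/call result, raising on protocol errors."""
    payload = json.loads(response)
    if "error" in payload:
        raise RuntimeError(payload["error"]["message"])
    items = payload["result"]["content"]
    return "\n".join(item["text"] for item in items if item["type"] == "text")


def fake_server(request: str) -> str:
    """Hypothetical stand-in for an MCP server that returns a canned forecast."""
    req = json.loads(request)
    text = f"Forecast for {req['params']['arguments']['city']}: clear skies."
    return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                       "result": {"content": [{"type": "text", "text": text}]}})


def call_tool(send: Callable[[str], str], name: str, arguments: dict) -> str:
    """Send one tools/call request through `send` and return the tool's text."""
    return result_text(send(make_call(name, arguments)))


print(call_tool(fake_server, "get_weather", {"city": "Portland"}))
```

The client then feeds that text back to the model, which writes the answer you see in the chat.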

You can build whatever type of MCP server you want, and people do. There are servers that let LLMs access your Gmail, chat on Discord, control Ableton Live, search Notion, query databases, and much more. Unfortunately, most of these servers have relatively tedious setup processes involving Docker and (usually) obtaining developer access keys from whichever service is being accessed. There have been some recent steps toward managing and running servers from the command line, but I have yet to see an easy-to-use GUI app.
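Much of that setup boils down to registering a command the client can launch. Claude Desktop reads its servers from a JSON config file with an `mcpServers` section, where each entry names a `command`, its `args`, and optional `env` variables (on macOS, the file has lived at `~/Library/Application Support/Claude/claude_desktop_config.json`; check your client’s documentation). The sketch below adds a Docker-launched entry to such a file. The server name, image name, and `EXAMPLE_API_KEY` variable are all hypothetical, and the demo writes to a scratch file rather than your real config.

```python
import json
from pathlib import Path


def add_docker_server(config_path: Path, name: str, image: str,
                      env: dict[str, str]) -> dict:
    """Add (or replace) a Docker-launched MCP server entry in a config file."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    # `docker run -i` keeps stdin open so the client can talk over stdio;
    # each bare `-e NAME` forwards that variable (set via `env`) into the container.
    args = ["run", "-i", "--rm"]
    for key in env:
        args += ["-e", key]
    args.append(image)
    config.setdefault("mcpServers", {})[name] = {
        "command": "docker",
        "args": args,
        "env": env,
    }
    config_path.write_text(json.dumps(config, indent=2))
    return config


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        # Scratch copy only; hypothetical server, image, and key values.
        path = Path(tmp) / "claude_desktop_config.json"
        add_docker_server(path, "example-service", "example/mcp-server:latest",
                          {"EXAMPLE_API_KEY": "<your developer key>"})
        print(path.read_text())
```

Every server you add means another entry like this, plus a key to obtain and paste in, which is exactly the friction a friendly GUI could remove.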

But it’s early days. MCP is just 113 days old, but the experimentation and momentum around it are already incredibly high.

I predict most companies with an AI and/or API strategy will launch an MCP server this year. If OpenAI adopts MCP in its clients, make that six months.