The Dynamic Between Domain Experts & Developers Has Shifted

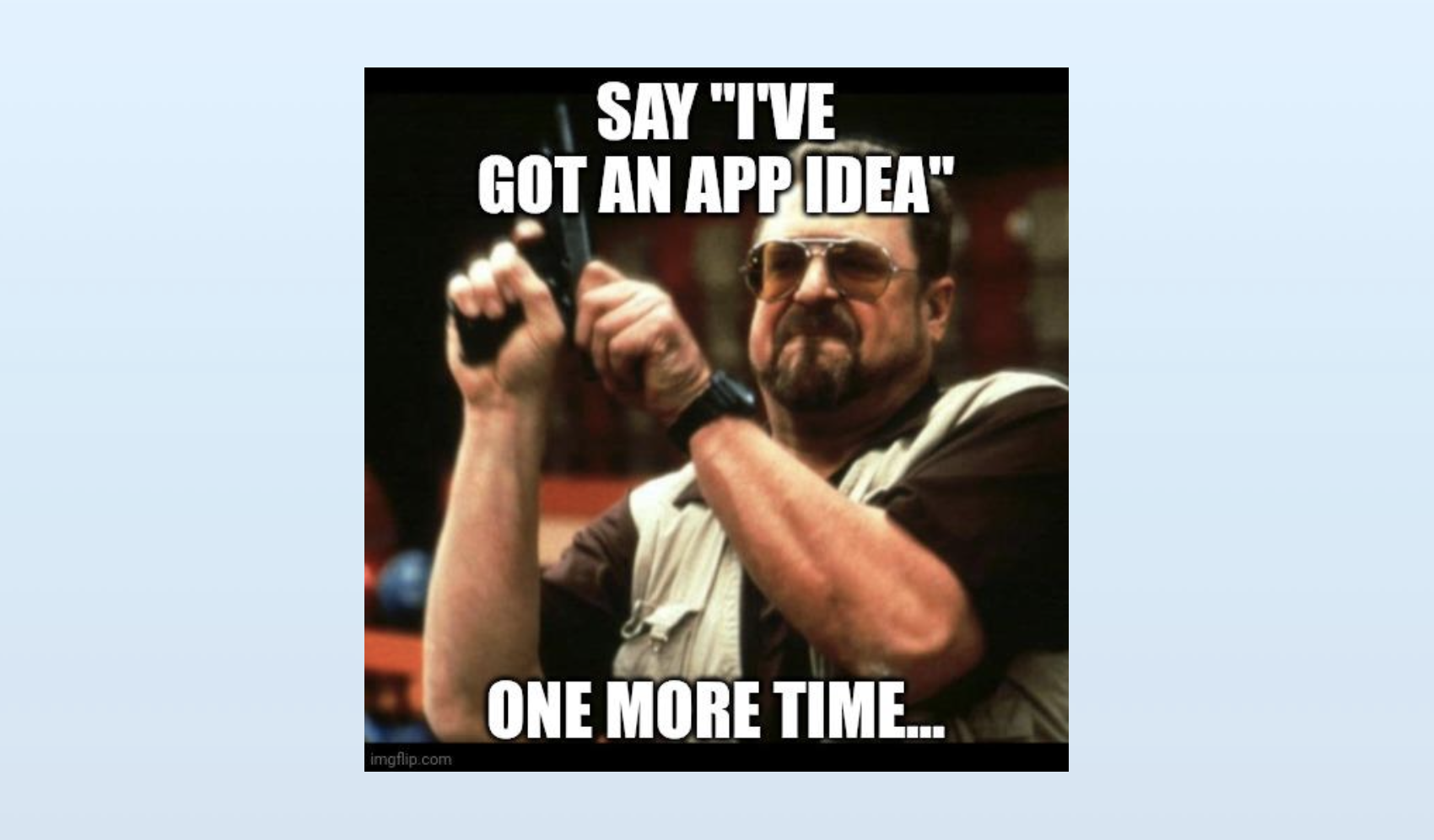

During the peak of mobile app madness, iOS and Android developers would often find themselves cornered by friends, relatives, and random people at parties.

“I’ve got a great idea for an app…”

More often than not, this dreaded sentence would be followed by a hard sell if the developer didn’t display adequate enthusiasm. If the developer didn’t act fast and feign exactly the right level of approval (enough to communicate they ‘got’ the idea, but not so much that they’d be asked to build it), the idea guy would move on to hashing out NDAs, negotiating equity allocations, and asking when coding could start.

Recently, I’ve noticed the AI era is a bit different. The balance of power has shifted. Builders now need domain experts as much as domain experts need builders.

You can no longer simply copy an app model with a few improvements or obsess over user feedback as you sharpen your prototype towards product-market fit.

To build a differentiated AI product you need training data and examples curated by a domain expert.

You need experts to evaluate your prompts, to speak plain language to the model, and to hammer out the edge cases based on the failures you’ve seen. Sure, programmers will still code up the UI, data pipelines, dashboards, API integrations, and more. But you need domain experts to bootstrap your prompts and evaluate your failures.

Recently, the always excellent Hamel Husain put his finger on this changing dynamic in an article on improving AI products:

> I recently worked with an education startup building an interactive learning platform with LLMs. Their product manager, a learning design expert, would create detailed PowerPoint decks explaining pedagogical principles and example dialogues. She’d present these to the engineering team, who would then translate her expertise into prompts.
>
> But here’s the thing: prompts are just English. Having a learning expert communicate teaching principles through PowerPoint, only for engineers to translate that back into English prompts, created unnecessary friction. The most successful teams flip this model by giving domain experts tools to write and iterate on prompts directly.

Hamel sees this pattern “with lawyers at legal tech companies, psychologists at mental health startups, and doctors at healthcare firms.”

I too have seen this. The first generation of AI-powered products (often called “AI Wrapper” apps, because they are “just” wrapped around an LLM API) were quickly brought to market by small teams of engineers, picking off the low-hanging problems. But today, I’m seeing teams of domain experts wading into the field, hiring a programmer or two to handle the implementation, while the experts themselves provide the prompts, data labeling, and evaluations.

For these companies, the coding is commodified but the domain expertise is the differentiator.

This morning, OpenAI underscored this point by launching a “Pioneers Program”. TechCrunch summarizes the program:

> Through the Pioneers Program, OpenAI hopes to create benchmarks for specific domains like legal, finance, insurance, healthcare, and accounting. The lab says that, in the coming months, it’ll work with “multiple companies” to design tailored benchmarks and eventually share those benchmarks publicly, along with “industry-specific” evaluations.

To understand model performance and, in turn, guide model development, OpenAI is turning to outside domain experts.

The tables have turned. The AI leader is now cornering the lawyers and doctors at the parties.

“I’ve got a great idea for an eval…”